The Northeast Fisheries Science Center's annual sea scallop research survey uses a towed sampling device called the HabCam. It collects approximately 5 million images of the ocean bottom off the Northeast United States. Scientists and volunteers then manually examine an astonishing 100,000 of these images, roughly 2 percent of the number gathered. They focus on identifying just four targets: sea scallops, fish, crabs, and whelks.

As a result, a wealth of data goes uncollected because of the sheer volume of images and the labor required to extract information from them.

Researchers are looking for ways to have machines identify sea life in these images faster and more efficiently than humans can. This would improve population data for sea scallops. Examining each image more thoroughly would also capture all kinds of information about other sea life and their habitats.

Enter Dvora Hart, an operations research analyst at the Northeast Fisheries Science Center. Hart has been at the forefront of estimating sea scallop population numbers from images taken by devices like the HabCam. The upcoming 2020 sea scallop assessment will once again use improved population data collected from images.

“HabCam gives us photos of animals in their natural environment without disturbing them; however, much of the information in the images is not collected because human annotators can only mark a small percentage of the available images,” said Hart. “Automated annotators can mark all the images and, given proper training, can identify a multitude of different targets—not just sea scallops, fish, crabs and whelks.”

Hart is part of an interdisciplinary team that developed the world’s first advanced automated image analysis software for the marine environment. The Video and Image Analytics for the Marine Environment, VIAME for short, uses convolutional neural networks—a recent advance in artificial intelligence. These networks teach computers to recognize species and features of their habitats in the images taken by the HabCam.

The work is so significant that Hart and her team won a 2019 Department of Commerce gold medal, the highest honor award offered to department employees.

How to Train Your Machine

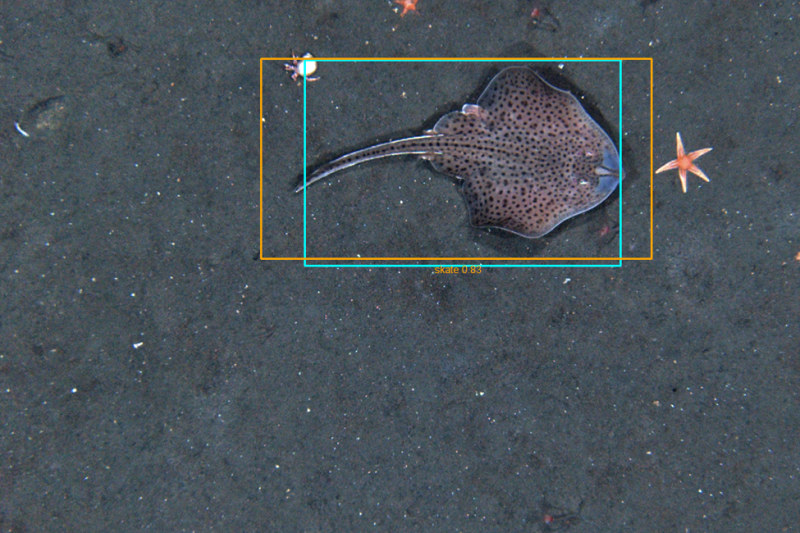

A computer can “see” an image, but it must be trained to identify what it is “looking” at. To train VIAME to read the HabCam images, human analysts draw a box around the sea life they find in each image. They then identify what’s in the box (an “object” to VIAME) with a label. The team uploads these labeled, or “annotated,” images, and VIAME is trained by showing it what each image represents.
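As a rough illustration of what one annotated image might contain, here is a minimal Python sketch. The class names, field names, pixel coordinates, and filename are all hypothetical, chosen only for this example; they are not VIAME's actual annotation format.

```python
from dataclasses import dataclass, field


@dataclass
class BoxAnnotation:
    """One box drawn by an analyst, plus the label for what is inside it."""
    label: str
    x_min: int  # pixel coordinates of the box corners (hypothetical layout)
    y_min: int
    x_max: int
    y_max: int

    def area(self) -> int:
        """Box area in square pixels."""
        return (self.x_max - self.x_min) * (self.y_max - self.y_min)


@dataclass
class AnnotatedImage:
    """An image together with every labeled box found in it."""
    filename: str
    boxes: list[BoxAnnotation] = field(default_factory=list)

    def count_by_label(self) -> dict[str, int]:
        """Tally how many boxes carry each label."""
        counts: dict[str, int] = {}
        for box in self.boxes:
            counts[box.label] = counts.get(box.label, 0) + 1
        return counts


# Made-up example: two sea scallops and one crab in a single image.
image = AnnotatedImage(
    filename="habcam_example_0001.jpg",  # hypothetical filename
    boxes=[
        BoxAnnotation("sea scallop", 120, 340, 210, 425),
        BoxAnnotation("sea scallop", 600, 90, 688, 170),
        BoxAnnotation("crab", 400, 500, 470, 560),
    ],
)

print(image.count_by_label())
```

A training set is then simply a large collection of such records, each pairing an image with its boxes and labels.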

Initially, VIAME gets a training set of about 40,000 annotated images. The program takes between 1 and 7 days to “learn” to recognize the objects in the images; it can then identify these objects in new images.

This year, Hart brought in reinforcements to help annotate the training images. Ayinde Best and Hayden Stuart, students participating in this year’s Partnership in Education Program, created a new set of more than 4,000 densely annotated images to enrich VIAME's learning.

They focused on objects that have not been priority targets, such as sea stars, bryozoans, anemones, hermit crabs, moon snails, and skate egg cases. This work is a start on a new collection of data on important aspects of the marine environment. For example, it will look at large populations of the sea star Astropecten americanus, a predator of young sea scallops.

Smoothing “Fuzzy” Data

In population assessments for fish, data underlying the analyses are primarily collected directly from the fish that are caught and examined. Scientists have a strong basis for assuming that the sample is 100 percent reliable. Images annotated by humans are also highly accurate as samples go, but few in comparison to the large number of images available.

VIAME produces samples that are more “fuzzy” than those produced from the images by human analysts. Many marine organisms, flounders and squid for example, can blend into their surroundings by adjusting their appearance to match them. Sea scallops can be covered with organisms that live on their shells, providing a convenient disguise. Several objects in an image can be located near one another; the water may be cloudy; the bottom may have a lot of rocks. There are any number of factors that can obscure the objects. This can result in VIAME categorizing a boxed area as empty when it isn’t.

This raises an interesting question: How do you create a population estimate from a group of imprecise or “fuzzy” variables? Answer: statistics to the rescue.

Led by Jui Han Chang, Hart and other colleagues compared the data collected from the same images by human analysts and by VIAME. Then they analyzed four different ways of calculating an estimate of objects in an image, combining data collected by human analysts and by VIAME. The results were used to get a corrected estimate of the abundance of organisms. That estimate is then applied to all of the collected images across a range of habitats and environmental conditions.
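The article does not say which four calculations the team compared. To make the general idea concrete, the sketch below uses a simple ratio estimator as an assumed stand-in: on images counted by both humans and the software, it computes how far the automated counts fall short, then scales the automated total for all images by that factor. The function name and the counts are invented for illustration and are not the team's method or data.

```python
def ratio_corrected_total(human_counts, auto_counts_same_images, auto_counts_all_images):
    """Scale automated counts by the human-to-automated ratio.

    human_counts and auto_counts_same_images are per-image counts for the
    SAME images, in the same order. auto_counts_all_images holds automated
    counts for every image, including those never seen by a human.
    """
    if len(human_counts) != len(auto_counts_same_images):
        raise ValueError("paired count lists must be the same length")

    auto_sum = sum(auto_counts_same_images)
    if auto_sum == 0:
        raise ValueError("automated counts sum to zero; ratio is undefined")

    # Correction factor: how many objects humans found per object the
    # software found on the jointly annotated images.
    correction = sum(human_counts) / auto_sum
    return correction * sum(auto_counts_all_images)


# Made-up numbers: four images counted by both, eight images overall.
human = [3, 0, 5, 2]
auto_paired = [2, 0, 4, 2]
auto_all = [2, 0, 4, 2, 1, 3, 0, 4]

estimate = ratio_corrected_total(human, auto_paired, auto_all)
print(f"Corrected estimate: {estimate:.1f} objects")
```

A real assessment would also need to account for false detections, differences among habitats, and the uncertainty of the correction itself, none of which this sketch attempts.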

“Automated image analysis has the potential to reduce labor costs, since automated annotators can reduce requirements for manual annotators,” says Hart. “But it also can do it faster—several images per second compared to 1-2 per minute for manual annotators—and annotate many more images for more potential targets, not only scallops and fish, but many other organisms on the seafloor that are not usually surveyed. This will improve our understanding of the seafloor communities and help guide ecosystem-based fisheries management.”
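To put those rates in perspective, here is a back-of-the-envelope calculation for a full survey of about 5 million images. The manual rate uses the midpoint of the quoted 1-2 images per minute, and the automated rate of 3 images per second is an assumed stand-in for "several"; both are illustrative values, not measurements.

```python
TOTAL_IMAGES = 5_000_000   # approximate images per survey, from the article
MANUAL_PER_MINUTE = 1.5    # assumption: midpoint of "1-2 per minute"
AUTO_PER_SECOND = 3.0      # assumption: one reading of "several per second"

# Hours of continuous work needed to annotate every image.
manual_hours = TOTAL_IMAGES / MANUAL_PER_MINUTE / 60
auto_hours = TOTAL_IMAGES / AUTO_PER_SECOND / 3600

# How many times faster the automated annotator is under these assumptions.
speedup = manual_hours / auto_hours

print(f"Manual:    {manual_hours:,.0f} hours")
print(f"Automated: {auto_hours:,.0f} hours")
print(f"Speedup:   {speedup:.0f}x")
```

Under these assumptions, a single manual annotator would need tens of thousands of hours to cover the full image set, while the automated annotator would need a few hundred.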